Python is a high-level, dynamically-typed language. It abstracts away memory management for the developer — but understanding how it works under the hood is crucial for writing efficient and memory-safe programs.

For the purposes of this article, I’ll focus on the memory management done by the default implementation of Python, CPython.

Table of Contents

Analogy

You can begin by thinking of a computer’s memory as an empty book intended for short stories. There’s nothing written on the pages yet. Eventually, different authors will come along. Each author wants some space to write their story in.

Since they aren’t allowed to write over each other, they must be careful about which pages they write in. Before they begin writing, they consult the manager of the book. The manager then decides where in the book they’re allowed to write.

Since this book is around for a long time, many of the stories in it are no longer relevant. When no one reads or references the stories, they are removed to make room for new stories.

In essence, computer memory is like that empty book. In fact, it’s common to call fixed-length contiguous blocks of memory pages, so this analogy holds pretty well.

The authors are like different applications or processes that need to store data in memory. The manager, who decides where the authors can write in the book, plays the role of a memory manager of sorts. The person who removed the old stories to make room for new ones is a garbage collector.

In Python, memory management is automatic, it involves handling a private heap that contains all Python objects and data structures. The Python memory manager internally ensures the efficient allocation and deallocation of this memory.

Basics

Everything in Python is an object

Everything in Python is an object, even types such as int and str.

PyObject

A PyObject is in fact just a Python object at the C level. And since integers in Python are objects, they are also PyObjects. It doesn’t matter whether it was written in Python or in C, it is a PyObject at the C level regardless.

The PyObject contains only two things:

- ob_refcnt: reference count

- ob_type: pointer to another type

The reference count is used for garbage collection. Then you have a pointer to the actual object type. That object type is just another struct that describes a Python object (such as a dict or int).

Each object has its own object-specific memory allocator that knows how to get the memory to store that object. Each object also has an object-specific memory deallocator that “frees” the memory once it’s no longer needed.

However, there’s an important factor in all this talk about allocating and freeing memory. Memory is a shared resource on the computer, and bad things can happen if two different processes try to write to the same location at the same time.

Memory Model Overview

Python’s memory management involves:

- Object-specific memory management

- Private heap allocation

- Automatic garbage collection

- Reference counting

- Cyclic garbage detection

All memory management is automatic but controllable to some extent.

Python Memory Architecture

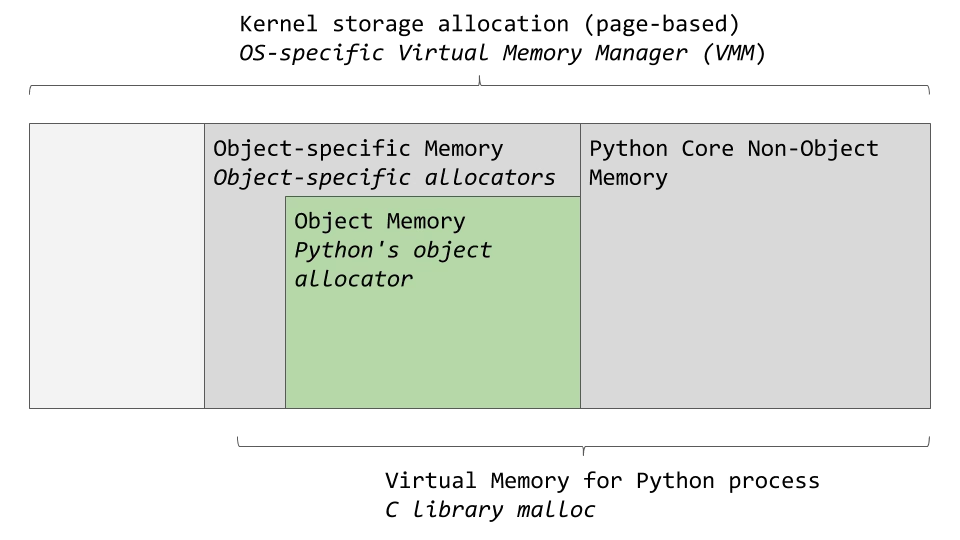

There are layers of abstraction from the physical hardware to CPython. The operating system (OS) abstracts the physical memory and creates a virtual memory layer that applications (including Python) can access.

An OS-specific virtual memory manager carves out a chunk of memory for the Python process. The darker gray boxes in the image below are now owned by the Python process.

Python uses a portion of the memory for internal use and non-object memory. The other portion is dedicated to object storage (your int, dict, and the like).

CPython has an object allocator that is responsible for allocating memory within the object memory area. This object allocator is where most of the magic happens. It gets called every time a new object needs space allocated or deleted.

Typically, the addition and removal of data for Python objects, such as lists and integers, doesn’t involve too much data at a time. So the design of the allocator is tuned to work well with small amounts of data at a time. It also tries not to allocate memory until it’s absolutely required.

Now we’ll look at CPython’s memory allocation strategy. First, we’ll talk about the 3 main pieces and how they relate to each other.

Python memory is divided into:

- Blocks: Smallest unit (used for objects < 512 bytes)

- Pools: Group of blocks of the same size

- Arenas: Group of pools (256 KB each)

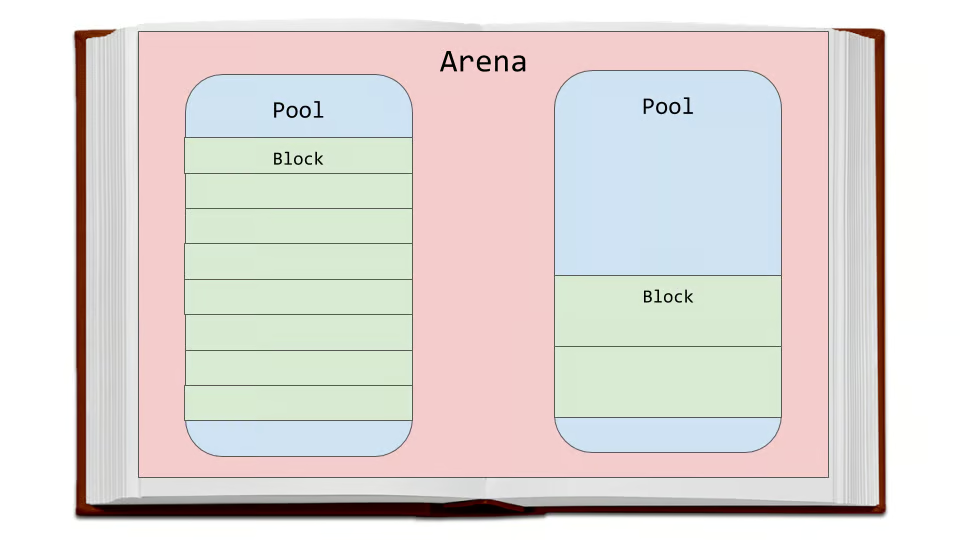

Arenas are the largest chunks of memory and are aligned on a page boundary in memory. A page boundary is the edge of a fixed-length contiguous chunk of memory that the OS uses. Python assumes the system’s page size is 256 kilobytes.

Within the arenas are pools, which are one virtual memory page (4 kilobytes). These are like the pages in our book analogy. These pools are fragmented into smaller blocks of memory.

All the blocks in a given pool are of the same “size class.” A size class defines a specific block size, given some amount of requested data.

Pools

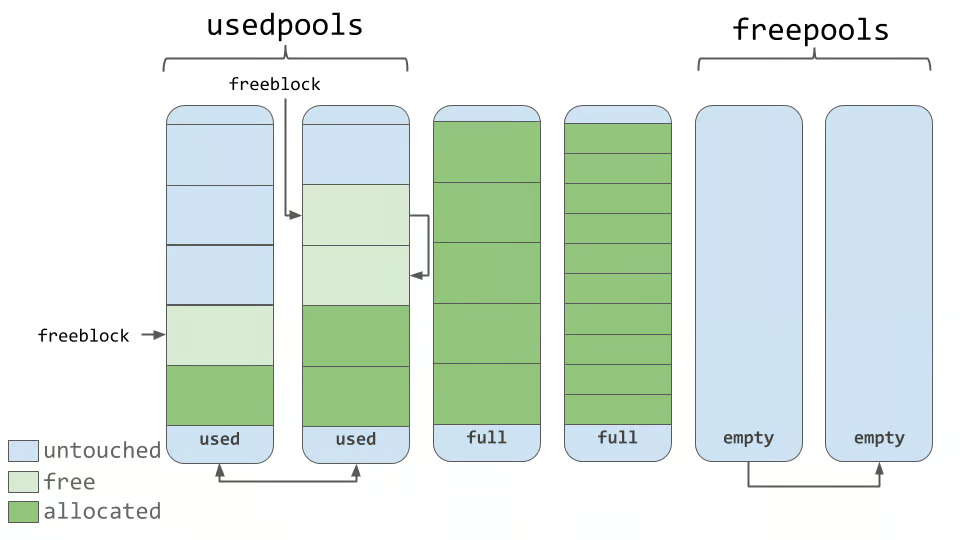

Pools are composed of blocks from a single size class. Each pool maintains a double-linked list to other pools of the same size class. In that way, the algorithm can easily find available space for a given block size, even across different pools.

A usedpools list tracks all the pools that have some space available for data for each size class. When a given block size is requested, the algorithm checks this usedpools list for the list of pools for that block size.

Pools themselves must be in one of 3 states: used, full, or empty. A used pool has available blocks for data to be stored. A full pool’s blocks are all allocated and contain data. An empty pool has no data stored and can be assigned any size class for blocks when needed.

A freepools list keeps track of all the pools in the empty state. But when do empty pools get used?

Assume your code needs an 8-byte chunk of memory. If there are no pools in usedpools of the 8-byte size class, a fresh empty pool is initialized to store 8-byte blocks. This new pool then gets added to the usedpools list so it can be used for future requests.

Say a full pool frees some of its blocks because the memory is no longer needed. That pool would get added back to the usedpools list for its size class.

You can see now how pools can move between these states (and even memory size classes) freely with this algorithm.

Blocks

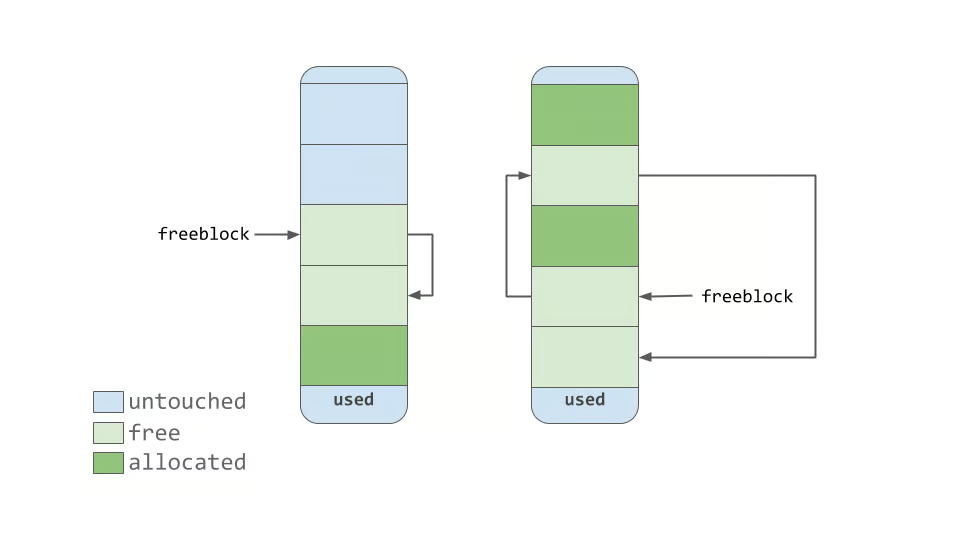

As seen in the diagram above, pools contain a pointer to their “free” blocks of memory. There’s a slight nuance to the way this works. This allocator “strives at all levels (arena, pool, and block) never to touch a piece of memory until it’s actually needed,”

That means that a pool can have blocks in 3 states. These states can be defined as follows:

- untouched: a portion of memory that has not been allocated

- free: a portion of memory that was allocated but later made “free” by CPython and that no longer contains relevant data

- allocated: a portion of memory that actually contains relevant data

The freeblock pointer points to a singly linked list of free blocks of memory. In other words, a list of available places to put data. If more than the available free blocks are needed, the allocator will get some untouched blocks in the pool.

As the memory manager makes blocks “free,” those now free blocks get added to the front of the freeblock list. The actual list may not be contiguous blocks of memory, like the first nice diagram. It may look something like the diagram below:

Arenas

Arenas contain pools. Those pools can be used, full, or empty. Arenas themselves don’t have as explicit states as pools do though.

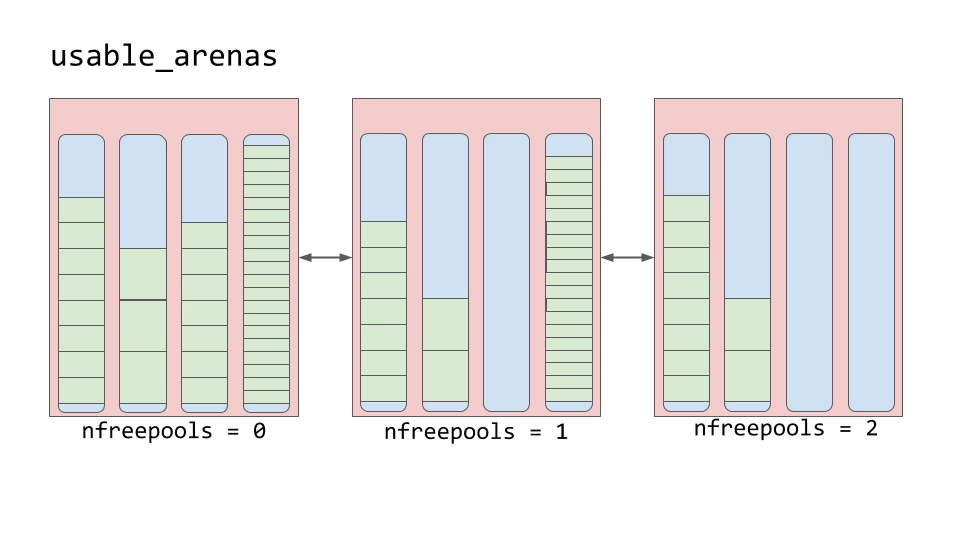

Arenas are instead organized into a doubly linked list called usable_arenas. The list is sorted by the number of free pools available. The fewer free pools, the closer the arena is to the front of the list.

This means that the arena that is the most full of data will be selected to place new data into. But why not the opposite? Why not place data where there’s the most available space?

This brings us to the idea of truly freeing memory. You’ll notice that I’ve been saying “free” in quotes quite a bit. The reason is that when a block is deemed “free”, that memory is not actually freed back to the operating system. The Python process keeps it allocated and will use it later for new data. Truly freeing memory returns it to the operating system to use.

Arenas are the only things that can truly be freed. So, it stands to reason that those arenas that are closer to being empty should be allowed to become empty. That way, that chunk of memory can be truly freed, reducing the overall memory footprint of your Python program.

Memory Allocation in Python

Python manages memory allocation in two primary ways − Stack and Heap.

| Memory Area | Purpose |

|---|---|

| Stack | Stores function calls, local variables |

| Heap | Stores objects, data structures (lists, dicts, etc.) |

Stack − Static Memory Allocation

In static memory allocation, memory is allocated at compile time and stored in the stack. This is typical for function call stacks and variable references. The stack is a region of memory used for storing local variables and function call information. It operates on a Last-In-First-Out (LIFO) basis, where the most recently added item is the first to be removed.

The stack is generally used for variables of primitive data types, such as numbers, booleans, and characters. These variables have a fixed memory size, which is known at compile-time.

Example

Let us look at an example to illustrate how variables of primitive types are stored on the stack. In the above example, variables named x, y, and z are local variables within the function named example_function(). They are stored on the stack, and when the function execution completes, they are automatically removed from the stack.

The stack is used for:

- Function call frames (each function call gets its own frame)

- Local primitive variables (like integers, floats, strings – if not inside a container)

- Keeps track of “where you are” in the program

- Immutable objects (int, str, tuple) are stored with their value fixed. Changes result in new allocations.

Characteristics:

- LIFO (Last In, First Out) structure

- Managed automatically (created and destroyed with function calls)

- Fast access

- Limited in size (stack overflow can occur in deep recursion)

Example

def foo():

a = 10 # 'a' is stored in stack

bar()

def bar():

b = 20 # 'b' is also in the stack

foo()Each call to foo() and bar() creates a new stack frame with its own variables.

Heap − Dynamic Memory Allocation

Dynamic memory allocation occurs at runtime for objects and data structures of non-primitive types. The actual data of these objects is stored in the heap, while references to them are stored on the stack.

The heap is used for:

- All Python objects and data structures (list, dict, class instances)

- Any variable whose size may not be known at compile time

- Mutable objects (list, dict, set, custom classes) are stored in heap and can be changed in-place.

Characteristics:

- Managed by Python runtime (interpreter and garbage collector)

- Larger and slower than the stack

- Shared across different functions

Example

a = [1, 2, 3] # list is created in the heap

b = {"name": "Alice"} # dict is in heapThe variables a and b are references (pointers) in the stack that point to the actual objects in the heap.

Why we need both heap and stack

The reason some variables are stored in the stack and others in the heap comes down to trade-offs between speed, size, lifetime, and complexity of memory management.

Stack is for Simple, Temporary Things

- Very fast to allocate/deallocate (LIFO structure)

- Automatically managed — when a function exits, its stack memory is cleaned

- Suitable for small, short-lived variables

Heap is for Complex or Long-Lived Data

- Size may not be known at compile time

- Needs to persist beyond a single function call

- May be shared or referenced in many places

How It Works Together

def foo():

x = 10 # `x` is in the stack

y = [1, 2, 3] # `y` is in the stack, but points to a list in the heap- x is a simple integer — it goes directly into the stack.

- y is a reference variable in the stack, pointing to a list object stored in the heap.

Even though the list [1, 2, 3] is created inside foo(), it’s placed in the heap because:

It’s a complex object

It might be passed outside the function or referenced elsewhere

Garbage collection is required for the heap, but not for the stack.

| Memory Type | Cleanup Required? | Why? |

|---|---|---|

| Stack | ❌ No GC needed | Lifetime is predictable and tied to function calls |

| Heap | ✅ Yes, needs GC | Lifetime is dynamic and unknown — objects may live long after creation |

Why Stack Doesn’t Need Garbage Collection

Stack works like a LIFO (Last In, First Out) container:

- When a function is called → a new stack frame is pushed

- When the function ends → the frame is automatically popped

All local variables in that frame disappear automatically — no tracking or cleanup needed.

Why Heap Needs Garbage Collection

Heap memory is for dynamic and persistent objects:

- Their lifetime is not tied to a function or block

- They may be:

- Shared across functions

- Created globally

- Mutated in-place

- Stored in containers

So Python can’t automatically know when to delete them — it needs help.

def foo():

a = [1, 2, 3] # allocated in heap

return a

x = foo() # Now x refers to the same list outside foo()- Even after foo() ends, the list lives on in the heap.

- Only when no variable points to it anymore, Python can safely delete it.

- This requires reference counting and garbage collection.

What Happens Without GC?

Without garbage collection:

- Heap memory would fill up over time with unused (but still “live”) objects

- This is called a memory leak

- Over time → your program slows down, crashes, or hangs

| Feature | Stack | Heap |

|---|---|---|

| Lifetime known at compile/runtime | Yes | No |

| Auto cleanup when function ends | Yes | No |

| Needs garbage collection | No | Yes |

| Who manages it | Python runtime (automatic) | Python’s garbage collector |

Analogy

Stack: Like using a whiteboard — write → erase when done. You don’t clean it; it cleans itself.

Heap: Like a table full of papers. You must manually trash the ones you don’t need — otherwise they pile up.

Garbage Collection

Garbage Collection in Python is the process of automatically freeing up memory that is no longer in use by objects, making it available for other objects. Pythons garbage collector runs during program execution and activates when an object’s reference count drops to zero.

Reference Counting

Python’s primary garbage collection mechanism is reference counting. Every object in Python maintains a reference count that tracks how many aliases (or references) point to it. When an object’s reference count drops to zero, the garbage collector deallocates the object.

Working of the reference counting as follows −

- Increasing Reference Count− It happens when a new reference to an object is created, the reference count increases.

- Decreasing Reference Count− When a reference to an object is removed or goes out of scope, the reference count decreases.

Example

import sys

# Create a string object

name = "Backendmesh"

print("Initial reference count:", sys.getrefcount(name))

# Assign the same string to another variable

other_name = "Backendmesh"

print("Reference count after assignment:", sys.getrefcount(name))

# Concatenate the string with another string

string_sum = name + ' Python'

print("Reference count after concatenation:", sys.getrefcount(name))

# Put the name inside a list multiple times

list_of_names = [name, name, name]

print("Reference count after creating a list with 'name' 3 times:", sys.getrefcount(name))

# Deleting one more reference to 'name'

del other_name

print("Reference count after deleting 'other_name':", sys.getrefcount(name))

# Deleting the list reference

del list_of_names

print("Reference count after deleting the list:", sys.getrefcount(name))

'''

Output

Initial reference count: 4

Reference count after assignment: 5

Reference count after concatenation: 5

Reference count after creating a list with 'name' 3 times: 8

Reference count after deleting 'other_name': 7

Reference count after deleting the list: 4

'''

How python know reference count become zero?

Python keeps track of reference counts automatically and immediately — every time you assign, pass, or delete a variable — by inserting low-level C code into the interpreter’s core.

Let see how Reference Counts works

Step 1: Every Python object has a refcount

All Python objects (in CPython) have a field called ob_refcnt.

Py_ssize_t ob_refcnt; // inside the objectStep 2: Python modifies refcount at every operation

Python increases or decreases the ob_refcnt during these actions:

| Action | What happens |

|---|---|

| Variable assignment | Refcount increases (INCREF) |

| Function argument passed | Refcount increases |

| Variable goes out of scope | Refcount decreases (DECREF) |

del statement used | Refcount decreases |

These are all implemented using macros in C:

Py_INCREF(obj); // increments obj->ob_refcnt

Py_DECREF(obj); // decrements obj->ob_refcntStep 3: What happens when refcount hits zero

Py_DECREF(obj);

if (obj->ob_refcnt == 0) {

// Object is no longer used

// Call destructor and free memory

obj->ob_type->tp_dealloc(obj);

}- When the refcount drops to 0, Python automatically calls the object’s deallocator

- This removes the object from the heap and frees up memory

So the object is destroyed immediately when no one is using it.

import sys

class MyClass:

def __del__(self):

print("Object destroyed")

obj = MyClass()

print(sys.getrefcount(obj)) # e.g., 2

ref = obj

print(sys.getrefcount(obj)) # e.g., 3

del obj

print(sys.getrefcount(ref)) # back to 2

del ref # Now refcount becomes 0 → triggers __del__()

'''

output

Object destroyed

'''Note: Even operations like: print(obj), temporarily increase the refcount during the function call.

But Wait: Above method Works for Non-Cyclic Objects

- Reference counting cannot detect cycles:

a.ref = b

b.ref = a- Even if no one refers to a or b, their counts are still 1.

- That’s why Python also uses a garbage collector to find such cycles.

Handling Circular References

A cyclic reference is when objects reference each other, forming a loop — so even though nothing else uses them, their reference counts never reach zero.

One of the main limitations of reference counting is its inability to handle circular references, where two or more objects reference each other, forming a cycle. In such cases, the reference count never drops to zero, leading to memory leaks.

class A:

pass

a = A()

b = A()

a.ref = b

b.ref = a

del a

del b # Both objects still exist, because they point to each other!- a points to b

- b points to a

- Both refcounts are still 1

- But no variable in your program can access them anymore

Reference counting fails here

Python needs a cycle-detecting garbage collector to clean them up.

Python’s Solution: Generational Garbage Collector

Python uses the gc module (enabled by default in CPython) that:

- Tracks all heap-allocated container objects

- Periodically scans them for unreachable cycles

- Breaks cycles and reclaims memory

How Does Python Detect Cycles?

Here’s the core logic Python’s GC uses (simplified):

Step 1: Track “container” objects

A container is any object that can hold references to other objects.

- Lists, dicts, sets, user-defined objects, etc.

- Anything that can hold a reference to another object

When you create one, Python adds it to an internal list of tracked objects.

Here are common built-in container types:

| Type | Example | What it can hold |

|---|---|---|

list | [1, "hi", [3, 4]] | any type of Python object |

tuple | (1, 2, "x") | immutable, but still a container |

dict | {"a": 1, "b": [2, 3]} | key-value pairs |

set | {1, 2, 3} | unique, unordered objects |

object | custom class instances | attributes can reference other objects |

deque | from collections module | double-ended queue |

Step 2: Scan for unreachable cycles

Periodically, or when memory is low, Python:

- Freezes the world briefly

- Runs a mark-and-sweep algorithm

Key Idea:

- Start from known live roots (global vars, stack frames)

- Follow all references

- Anything not reachable from roots but still self-referencing → must be garbage

These are collected even if their refcounts are > 0.

Step 3: Remove cycles

Python:

- Calls __del__() methods (if defined and safe)

- Frees the objects

- Updates internal tracking

Example

import gc

class Node:

def __init__(self):

self.ref = None

a = Node()

b = Node()

a.ref = b

b.ref = a

del a

del b # 👈 Now they form a cycle, no external references

# Manually run garbage collection

gc.collect()This will collect the cycle and free both Node instances.

How to Monitor Cycles

import gc

gc.set_debug(gc.DEBUG_LEAK)

gc.collect()

print("Unreachable:", gc.garbage)If Python can’t collect something (like objects with unsafe del()), it ends up in gc.garbage.

Summary

| Trigger | Python’s Response |

|---|---|

| You create mutual references | Tracked by GC |

| You delete your last external ref | GC can’t tell just from refcount |

| GC runs periodically | Detects unreachable cycles |

| GC marks them unreachable | Frees them if no __del__() conflict |

gc.collect() called manually | Forces detection and cleanup |

Anything not reachable from roots but still self-referencing → must be garbage

“Roots” = Active References

In Python, roots are:

- Variables in stack frames (like local variables inside running functions)

- Global variables

- Objects held by the interpreter itself (e.g., builtins)

- Any object the program can still access

Roots are starting points for reachability — if something can be reached from them, it is alive.

If there is no path from any root variable to an object, then the program has no way to use or touch that object anymore.

Why Can’t Refcount Handle This Alone?

So if two objects point to each other, they each have a count of 1:

a.ref → b

b.ref → aReferernce count will be alway greater than 0. Python take this as still alive objects, but in actual no one is using that objects. They are dead.

How Python distinguishes between a cycle that must be cleaned vs. one that’s still in use.

Case 1: Cycle Exists but Is Garbage → Must Be Cleaned

import gc

class Node:

def __init__(self, name):

self.name = name

self.ref = None

def __del__(self):

print(f"Deleted: {self.name}")

def create_cycle():

a = Node("A")

b = Node("B")

a.ref = b

b.ref = a # cyclic reference

create_cycle() # No variable keeps a reference to a or b

gc.collect() # Detects and cleans cycleWhat happens:

- a → b

- b → a

- Nothing else in the program refers to them

- They are unreachable from roots

Garbage Collector identifies the cycle and collects them

Deleted: B

Deleted: ACase 2: Cycle Exists but Is Still in Use → Must NOT Be Cleaned

import gc

class Node:

def __init__(self, name):

self.name = name

self.ref = None

def __del__(self):

print(f"Deleted: {self.name}")

a = Node("A")

b = Node("B")

a.ref = b

b.ref = a # ⛓️ cycle

# 👇 Keep reference to `a` in the program

print("Before GC:", gc.collect()) # GC runs, but does NOT clean `a` and `b`

del a # Now only `b` → `a`, `a` → `b`

print("After deleting a:", gc.collect()) # Now it can be cleaned

What happens:

Initially:

- a → b, b → a

- BUT a is a live variable

- So GC sees the cycle is reachable

- GC must not touch them

After del a:

- The cycle becomes unreachable

- GC safely collects them

When exactly does Python’s Garbage Collector (GC) run?

Python’s garbage collector runs periodically based on object allocation thresholds — not only when memory is low.

Python Uses a Generational GC

CPython (the default Python implementation) organizes objects into three generations:

| Generation | Description | Cleanup Frequency |

|---|---|---|

| Gen 0 | New, short-lived objects | 🟢 Frequent |

| Gen 1 | Survived Gen 0 collection | 🟡 Less frequent |

| Gen 2 | Long-lived or persistent | 🔴 Rare |

How Does Python Decide When to Run GC?

Python GC runs automatically using allocation/deallocation counters, not just memory pressure.

Each generation has a threshold:

import gc

print(gc.get_threshold()) # Default: (700, 10, 10)Default threshold:

- Collect Gen 0 when you’ve created 700+ new objects since the last collection

- If many objects survive → collect Gen 1

- If many survive Gen 1 → eventually Gen 2 is collected

import gc

print("Before:", gc.get_count())

for _ in range(1000):

_ = [1, 2, 3] # create lots of objects

print("After:", gc.get_count())You’ll notice the GC may run automatically after some number of allocations.

Advantages of Reference Counting

- Immediate Reclamation: Memory is freed immediately when the reference count drops to zero, which can lead to lower memory usage and less overhead compared to other garbage collection strategies that may only reclaim memory at certain intervals.

- Simplicity: The implementation of reference counting is straightforward, making it easier to understand and debug.

- Deterministic Destruction: Objects are destroyed as soon as they are no longer needed, which can be particularly useful for managing resources like file handles or network connections.

Disadvantages of Reference Counting

- Cyclic References: As mentioned, reference counting cannot handle cyclic references, which can lead to memory leaks.

- Performance Overhead: Incrementing and decrementing reference counts adds overhead to every assignment and deletion operation, which can impact performance, especially in programs with many short-lived objects.

- Memory Overhead: Each object must store its reference count, adding to the memory footprint of objects.

Strategies to Mitigate Cyclic References

To mitigate the issues caused by cyclic references, developers can take several approaches:

Weak References: Python’s weakref module allows the creation of weak references, which do not increase the reference count of the objects they refer to. This is useful for caching and other applications where circular references might otherwise occur.

import weakref

class Node:

def __init__(self, value):

self.value = value

self.next = None

node1 = Node(1)

node2 = Node(2)

node1.next = weakref.ref(node2)

node2.next = weakref.ref(node1)Manual Breakage: Developers can manually break reference cycles by setting references to None before an object is deleted.

node1.next = None

node2.next = None

del node1

del node2Garbage Collection: Python’s garbage collector complements reference counting by detecting and collecting objects involved in reference cycles. The garbage collector is part of the gc module, which provides the functionality to tune and control garbage collection.

import gc

gc.collect() # Forces garbage collectionGarbage Collection (GC)

GC is secondary mechanism to clean up cyclic references (e.g., objects referring to each other).

Example

class Node:

def __init__(self):

self.ref = None

a = Node()

b = Node()

a.ref = b

b.ref = a # Cycle!

del a

del b

# Memory leak unless GC collects itPython’s garbage collector detects and frees cycles like the one above using generational GC.

Python’s gc module handles this:

import gc

gc.collect() # Manual collection

GC Generations:

- Python uses generational garbage collection.

- Objects are grouped by age:

- Gen 0: Newly created

- Gen 1: Survived 1 collection

- Gen 2: Survived multiple collections

Objects that live longer are collected less frequently (performance optimization).

Thresholds:

You can inspect and modify GC thresholds:

gc.get_threshold() # Default: (700, 10, 10)

gc.set_threshold(1000, 15, 15)Summary of Differences

| Feature | Reference Counting | Garbage Collection |

|---|---|---|

| Trigger | Happens immediately | Happens periodically |

| Scope | Works for all objects | Focuses on cyclic references |

| Detection Method | Simple reference count | Traces object graphs for cycles |

| Strength | Fast and predictable | Handles memory leaks from cycles |

| Weakness | Can’t handle cycles | More overhead, less predictable timing |

Memory Allocation Internals

pymalloc

- Python has a custom allocator for small objects (< 512 bytes)

- Avoids OS malloc() for performance reasons

- Objects of similar sizes are grouped in pools (4096 bytes)

Larger objects (> 512 bytes)

- Allocated using standard malloc() via the OS

Memory Optimization Techniques in Python

Interning

Python “interns” commonly-used immutable objects to save memory.

a = 10

b = 10

print(id(a) == id(b)) # True (same memory)

x = "hello"

y = "hello"

print(id(x) == id(y)) # True

Use of Generators

Avoid building large lists in memory:

# Memory inefficient

squares = [x**2 for x in range(10**6)]

# Memory efficient

squares_gen = (x**2 for x in range(10**6))slots

In custom classes, avoid the dynamic __dict__ overhead:

class Person:

__slots__ = ['name', 'age'] # Reduces memory usageTools for Memory Monitoring

sys.getsizeof()

Get size of a Python object in bytes.

import sys

print(sys.getsizeof(123)) # 28 bytestracemalloc

Track memory allocations over time.

import tracemalloc

tracemalloc.start()

# Your code

snapshot = tracemalloc.take_snapshot()

for stat in snapshot.statistics('lineno')[:10]:

print(stat)memory_profiler

Line-by-line memory usage in Python.

Install

pip install memory_profilerExample

from memory_profiler import profile

@profile

def my_func():

...Execution

python -m memory_profiler your_script.pyPython vs Other Languages

| Feature | Python | C/C++ | Java |

|---|---|---|---|

| Manual Allocation | Not needed (automatic) | malloc/free | No |

| Garbage Collection | (Generational + cyclic) | (Manual) | (Mark & Sweep + G1 GC) |

| Reference Counting | Primary mechanism | No | NO |

Common Pitfalls

- Memory leaks due to lingering references in global scope, closures, or containers

- Circular references in custom classes not cleaned up (especially if you override __del__)

- Using large in-memory objects (e.g., list of billions of rows) without streaming

Summary

| Component | Description |

|---|---|

| Reference Counting | Primary memory release mechanism |

| Garbage Collection | Handles cyclic references |

| Private Heap | All Python objects live here |

| pymalloc | Specialized allocator for small objects |

| Generational GC | Optimizes collection frequency |

| Tools | gc, sys, tracemalloc, memory_profiler |

Conclusion

Understanding Python’s memory management system is key for writing efficient and optimized code. By comprehending how reference counting works, how the garbage collector handles cyclic references, and the memory allocation strategies employed by Python, we can avoid common issues and improve the performance of our applications. Utilizing techniques like weak references, manual garbage collection tuning, and memory profiling can further improve memory management practices.